Expra. Every German psychologist did an Expra during their undergrad, usually in their 2nd or 3rd semester. Expra is short…

Acknowledgments: The ideas presented here were developed over the course of many years together with my friend and colleague nemesis Johannes…

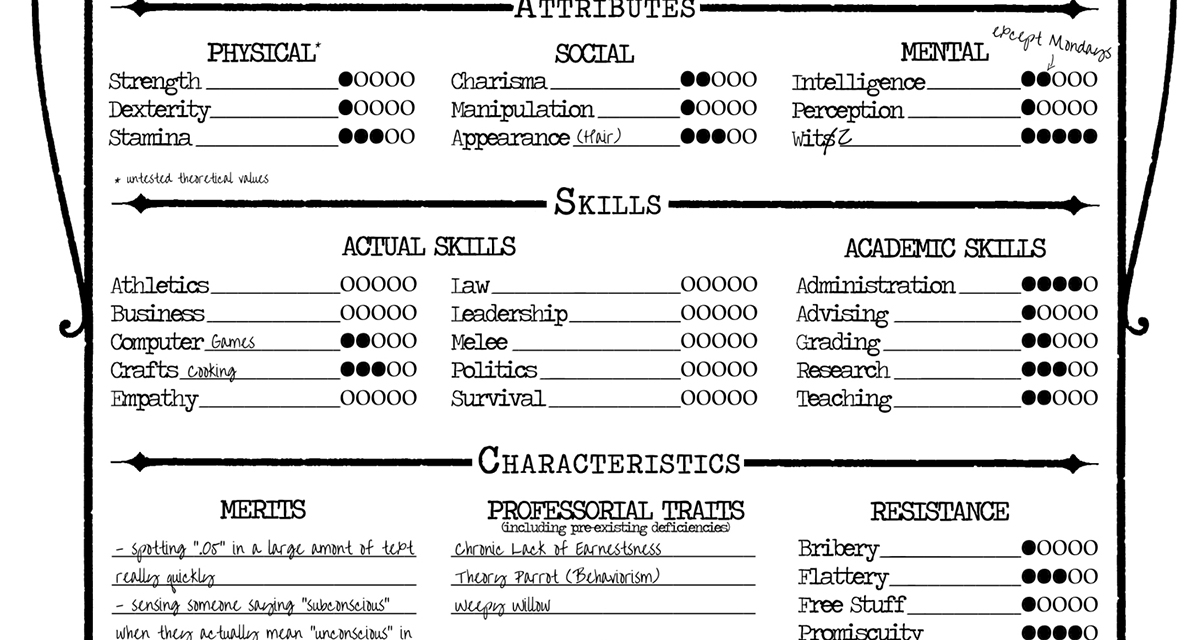

April Fool’s Post 2017: “The pursuit of knowledge is, I think, mainly actuated by love of power. And so are…

So… what is media psychology anyway? Does playing violent video games increase aggression?[1] No, but violent video game research kinda does.…

(this post was jointly written by Malte & Anne; in a perfect metaphor for academia, WordPress doesn’t know how to…

A research parasite, a destructo-critic, a second-stringer, and a methodological terrorist walk into a bar. Their collective skepticism creates a…