I don’t like getting into fights, and sometimes I worry that this keeps me from becoming a proper methods/stats person. Getting into fights about one, several, or all of the following just seems to be part of the job:

- p values (the canonical point of contention)

- Bayes factors

- structural equation modeling

- instrumental variable estimation

- propensity score matching/weighting/anything

- point-and-click moderated mediation analysis

- causal inference based on observational data in general

- logistic regression

- linear probability models

- complex systems modeling

- anything involving machine learning for psychologists

- multiverse analysis

- preregistration

- etc.

I will use “statistical approaches” as a label for this collection of heterogeneous things. Including preregistration is a bit of a stretch – it is a whole workflow and the people who argue about it are not necessarily methods/stats people – but I think many of the same arguments apply, so bear with me for now.

Does it make sense to say that a statistical approach is good or bad? People certainly do pass judgment on them.

One way to evaluate statistical approaches is to think of them as potential interventions into the world (the independent variable) and consider the quality of the resulting scientific inferences (the outcome). If people used Bayes factors, would science be better off?

This is a causal question, and it is an underspecified one.

First of all, causal effects involve contrasts between at least two states of the world, so we need to compare the Bayes factor world to some other world. Here, this would likely be the incumbent – mostly a p value world with some confidence intervals thrown into the mix – but unless specified, it could also be some other hypothetical world (e.g., a world in which a lot more people subscribe to the not-so-new new statistics of effect size estimation).

Then, we need to think about the treatment. For now, imagine that we could surgically intervene on practices: at least hypothetically, at some point in time, we could change precisely which statistical approach people use without changing anything else. For example, we could get people to use propensity score matching in precisely those situations in which they used to use regression adjustment before.

Conditioning versus marginalizing over researchers’ skills

Even with a well-defined treatment, causal effects can be heterogeneous (what works for you and your research needn’t work for everybody else), and we need to decide how to deal with that when judging a statistical approach.

For example, researchers’ pre-existing skill levels may vary, which may in turn affect whether and to what degree their work improves if they use statistical approach A rather than B. For highly skilled researchers, using propensity scores for third-variable adjustment may afford more flexibility, resulting in better inferences because they can more readily model complex interactions. Researchers at a low skill level may fail to realize those benefits and actually end up making worse inferences, because the complexity of the procedure distracts them and gives them the impression that something magical is happening rather than plain third-variable adjustment.

These are conditional causal effects, holding a third variable (researcher skill) constant at a given level (high, low). Evaluating the conditional causal effect at a low skill level is a good way to bash any new and sufficiently complex statistical approach; unskilled researchers will probably botch new and complex things. Evaluating the conditional causal effect at a high skill level is a good way to defend any new and complex statistical approach; skilled researchers will probably apply it well and arrive at good inferences.

Both of these can be true at the same time; which one should we consider more important in our overall judgment? If we’re afraid that bad science crowds out all the good stuff and wastes huge amounts of money, we may be more concerned about what happens at the low skill level. If we believe that actual scientific advancement only happens at the top level and nobody reads the bad stuff anyway, we may be more concerned about what happens at the high-skill level.[1]

More “agnostically”, we could simply average across researchers’ pre-existing skills in the population, weighting each conditional effect by how common that skill level is: if many more people will apply an approach badly than well, we may end up with a negative average effect despite a positive effect among a minority. This is akin to evaluating a marginal effect rather than a conditional effect.[2]
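To make the weighting concrete, here is a minimal sketch with made-up numbers. The effect sizes (+0.5 for high-skill, -0.2 for low-skill researchers, on some unitless "inference quality" scale) and the 20/80 skill split are hypothetical, chosen only to illustrate how a positive effect among a minority can coexist with a negative marginal effect.

```python
# Hypothetical conditional effects of switching from approach B to approach A
# on an (assumed, unitless) measure of inference quality, by skill level.
conditional_effects = {"high": 0.5, "low": -0.2}

# Hypothetical share of researchers at each skill level (must sum to 1).
skill_distribution = {"high": 0.2, "low": 0.8}


def marginal_effect(effects, weights):
    """Average the conditional effects, weighting each by its population share."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(effects[level] * weights[level] for level in effects)


print(round(marginal_effect(conditional_effects, skill_distribution), 2))
```

With these numbers the script prints -0.06: the approach helps every high-skill researcher, yet the population-average effect is negative because low-skill users dominate.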

The problem is that we can’t know for sure how skills are distributed in the population.
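How much hinges on that unknown distribution can be sketched by sweeping over the assumed share of high-skill researchers. The two conditional effects below are the same hypothetical numbers as before; `break_even_share` is an illustrative helper that solves for the share at which the marginal effect is exactly zero.

```python
# Hypothetical conditional effects (not estimates of anything real).
EFFECT_HIGH = 0.5   # effect among high-skill researchers
EFFECT_LOW = -0.2   # effect among low-skill researchers


def marginal_effect(share_high):
    """Marginal effect when a fraction `share_high` of researchers is high-skill."""
    return share_high * EFFECT_HIGH + (1 - share_high) * EFFECT_LOW


def break_even_share(effect_high, effect_low):
    """Share of high-skill researchers at which the marginal effect is zero.

    Solves p * effect_high + (1 - p) * effect_low = 0 for p; this is only
    meaningful when the two conditional effects have opposite signs.
    """
    if effect_high * effect_low >= 0:
        raise ValueError("conditional effects must have opposite signs")
    return -effect_low / (effect_high - effect_low)


for share in (0.1, 0.3, 0.5):
    print(f"share high-skill = {share:.1f}: marginal effect = {marginal_effect(share):+.2f}")

print(f"break-even share: {break_even_share(EFFECT_HIGH, EFFECT_LOW):.3f}")
```

Under these assumptions the verdict flips once roughly 29% of researchers are high-skill, so two people who agree on both conditional effects can still reach opposite overall judgments simply by guessing different skill distributions.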

The average skill level of course lies somewhere below the skill level of you, my dear reader. But where does it lie in absolute terms? If you think that most people struggle to grasp the basics of simple linear regression, you may think that adding structural equation modeling on top will do little to improve things. If you think that people already have a firm grasp of the methods they use, adding complexity and flexibility likely won’t hurt.

Furthermore, intervening on statistical approaches may also affect subsequent skill levels. Maybe if people start using some more complex approach, their skills slowly catch up, inferences get better over time, and everyone lives happily ever after. So we can also evaluate causal effects at different points in time and arrive at different conclusions. Multiverse analysis/specification curve analysis is an example where the time point of evaluation could make a difference: maybe the paper reporting the analysis doesn’t itself arrive at the best possible inferences (no positive effect on research quality here and now), but the approach could improve researchers’ understanding of the role of various data-analytic decisions (e.g., which ones are arbitrary and which ones change the analysis target; a positive effect on research quality at some point in the future).

Questions about Research Questions

Apart from researcher skill, the effects of any statistical approach may vary by research question. Some statistical approaches already entail a certain research question. For example, if you use a predictive machine learning algorithm, you will address a predictive research question. So we could evaluate the effects of a certain statistical approach conditional on the matching research question: Will the predictive machine learning algorithm improve inferences when a predictive research question is of interest? Unfortunately, that’s not always how approaches are adopted. For example, researchers trying to address causal research questions may also try to apply the predictive machine learning algorithm, even though prediction is not what they’re actually interested in. For those scenarios, the evaluation may come out less positive. Opponents of an approach may naturally focus on its effect conditional on people applying it as mindlessly as possible; proponents may naturally focus on people applying it precisely where it is advantageous over the status quo. In practice, we are guaranteed to get a mix of very sound and very stupid applications, and we should somehow average over that.

Here again, things may change over time because the statistical approach affects the research questions people ask. I am fully convinced that this happens; if you’ve used predictive models to address causal questions for long enough, it may be easier to simply change your research questions to predictive ones. Whether that’s good or bad is a question for another blog post.

Precision surgery vs. begging people to finally change their ways, please 🥺

In reality, we cannot surgically intervene on research practices. We can at the very best suggest what people ought to do (maybe a bit forcefully during the review process) and decide what to pass on to the coming generations of researchers through teaching. These complex interventions will often deliberately target researchers’ skills as well: if the whole point of your intensive tutorial is getting people to use approach X, then evaluating the effect of X at the pre-intervention skill level seems moot. You don’t want people to apply X at their pre-intervention skill level; you want them to take the whole intervention and develop the skills to use X as well as possible. These complex interventions will also affect research questions. For example, I have written a lot about causal inference based on observational data; this may be taken as active encouragement for people to switch to causal research questions based on observational data, for which I have been criticized. That criticism has a point; I am certain some very bad studies drawing causal conclusions based on observational data were inspired by my work. (Of course, this itself is causal inference on observational data, so I feel vindicated regardless. Let’s hope my average effect on research quality is positive.)

The effects of the full-blown complex intervention will play out through more convoluted paths. For example, if an approach X yields particularly sexy results, it may in the short term be taken up selectively by researchers who don’t care much for rigorous implementation. In the long term, that may enable those researchers’ careers to thrive, leaving less space for researchers who do better work and thus shifting the pool of researchers in the field toward a lower level of skill. That could, in principle, be true even if a surgical intervention in which everyone in the original population adopted X would have had a positive effect on research quality. Additionally, many things come with opportunity costs. The time you spend writing down a preregistration is time that you cannot spend improving your research design in some other way; and so for some people,[3] preregistration may not only be “redundant at best” but may actively prevent them from doing the best research they can. Whether that is enough to render the average effect of preregistration negative is a different question.
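The selection story above (sexy results attracting less rigorous researchers, who then crowd out the rest) can be sketched as a toy model. Everything here is an assumption for illustration: the hypothetical conditional effects from earlier, a starting share of 50% high-skill researchers, and a fixed `RETENTION` factor by which the high-skill share shrinks each generation. The model deliberately ignores upskilling, which could push in the opposite direction.

```python
# Toy model (all numbers hypothetical): approach X helps high-skill researchers
# and hurts low-skill ones, and each generation the high-skill share of the
# field shrinks by a fixed retention factor.
EFFECT_HIGH = 0.5
EFFECT_LOW = -0.2
INITIAL_SHARE_HIGH = 0.5
RETENTION = 0.8  # fraction of the high-skill share that survives each generation


def marginal_effect(share_high):
    """Population-average effect of X for a given high-skill share."""
    return share_high * EFFECT_HIGH + (1 - share_high) * EFFECT_LOW


def effects_over_time(generations):
    """Return (generation, share_high, marginal effect) for each generation."""
    rows = []
    share_high = INITIAL_SHARE_HIGH
    for gen in range(generations):
        rows.append((gen, share_high, marginal_effect(share_high)))
        share_high *= RETENTION  # composition shift caused by adopting X
    return rows


for gen, share, effect in effects_over_time(6):
    print(f"generation {gen}: share high-skill = {share:.2f}, marginal effect = {effect:+.3f}")
```

Generation 0 corresponds to the surgical intervention on the original population, where the marginal effect is positive; in this toy setup the sign flips by generation 3, once the high-skill share has dropped below the break-even point.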

Let a hundred flowers bloom (but skip the crackdown)

So we are in a situation in which we can evaluate a statistical approach in many different ways that can result in seemingly contradictory but fully compatible answers. On top of that, any evaluation will involve a number of unknown variables. What do researchers’ skills actually look like? Which research questions are they trying to tackle? We can only guess the answers based on information that is available to us, which will be a mix of our reading of the published literature and local information (“Everybody in my department is doing median splits, send help!”). The latter is very likely to be biased because we are not surrounded by a representative sample of our field of research. The former is also biased because the published literature is not representative of the average research that is being conducted; publication itself acts as a distorting selection filter. And how will things play out in practice, who will pick up what, and how? Again we can only guess based on our experience with how the world and people work.

I thus think it’s inevitable that people will disagree about the merits of various statistical approaches, even if there is high agreement about all facts that can be verified. People weight different types of effects differently (“sure, this would work if everybody was a saint, however…”) and have different guesstimates of both the status quo (e.g., researchers’ skills) and how things will work out in the long run (can researchers simply ignore misapplications of this approach when the resulting plots look so nice?).

There are some edge cases for which it’s easy to actually average over a lot of factors and still come out with a pretty solid assessment. If the math underlying a certain approach is just wrong in a meaningful way, it’s very unlikely that the net effect is positive. If the approach tackles a research question that elicits neither interest nor understanding, maybe just don’t (looking at you, MANOVA, keep on burnin). If the data requirements for valid inferences just won’t be met anytime soon and if things go haywire in unpredictable ways whenever requirements aren’t met, maybe just don’t.[4]

But oftentimes there will be a lot of room for disagreement, and there is so much uncertainty at every step that I have a hard time coming down with strong opinions myself. That said,

FIGHT! FIGHT! FIGHT!

I still often enjoy watching from the sidelines. Things get a bit repetitive as one ages; I personally don’t need another season of Bayesians vs. Frequentists.[5] But somebody else may still profit, because whenever I encounter a debate for the first time, I feel like there’s something to learn. Sometimes, it’s something about the relative strengths and pitfalls of various statistical approaches and how things can be botched at various skill levels. Sometimes, it’s something about people’s underlying expectations of how other researchers behave. Sometimes, it’s about underlying values, such as whether or not statisticians ought to be held accountable for misapplications of stats. Sometimes, it’s even about people’s beliefs about the role of science in society.

Sometimes there is educational value, sometimes there is at least entertainment value. But I can’t get very passionate myself.

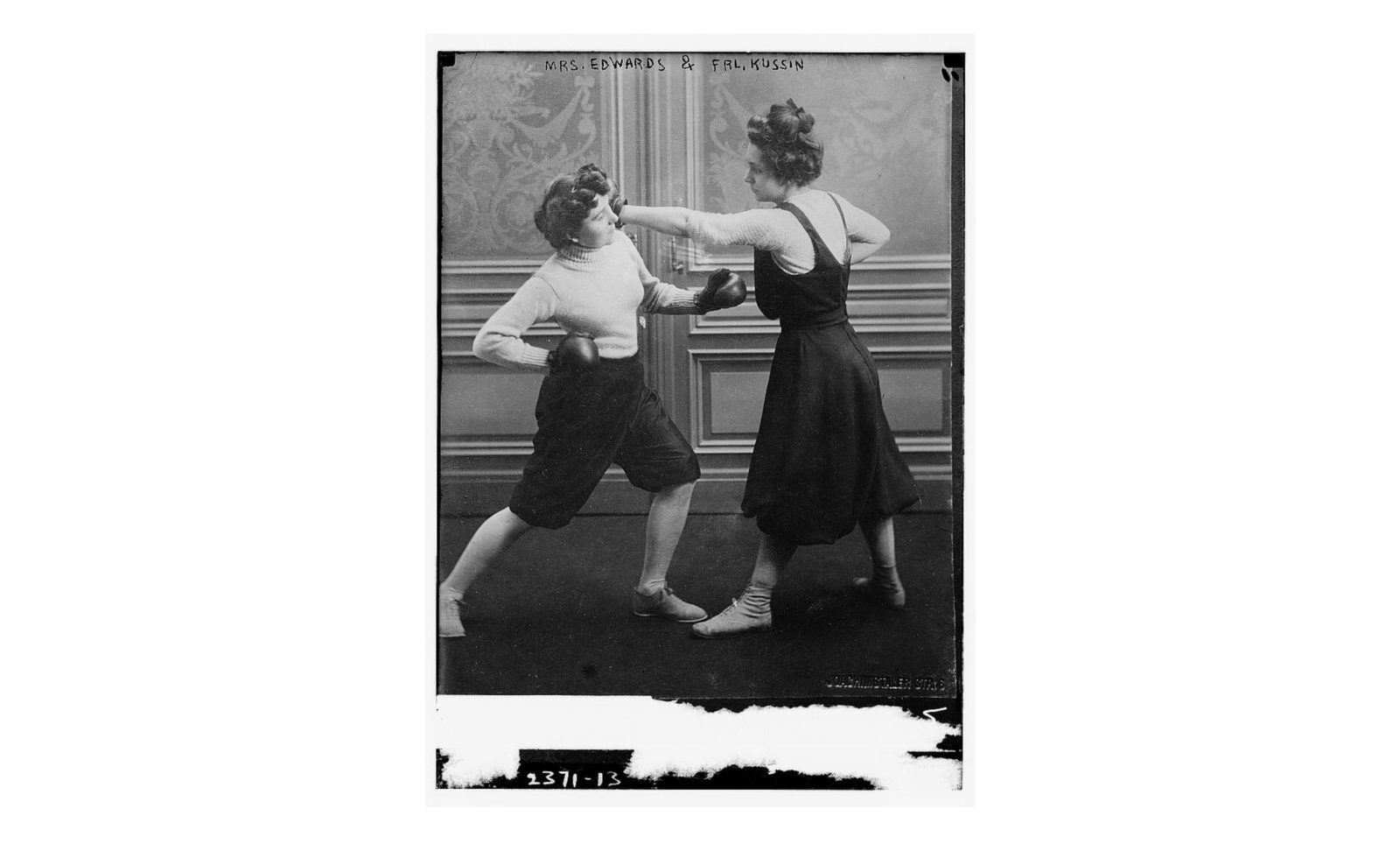

…UNLESS somebody says something against defining estimands and spelling out assumptions. If you want to do that, how about we go outside and settle this like emotionally stunted men.

Footnotes

1. Adam Mastroianni writes about weak-link versus strong-link problems: in weak-link problems, the overall quality depends on the quality of the worst stuff; in strong-link problems, it depends on the quality of the best stuff. He argues that science is a strong-link problem, which would imply that we should mostly care about what happens at the high-skill level. Simine Vazire points out that even if science is a strong-link problem, we’re in trouble if we can’t tell what’s strong vs. weak science.
2. In psychology, I sometimes encounter the misconception that when effects are heterogeneous, the average effect is wrong in some meaningful way. It is true that the average effect may not apply to any single individual; just like no single individual in a sample with an average of M = 100 may actually precisely score 100, even if the distribution is perfectly normal. But the average effect is still the average of the effects; nobody (I hope) says that the mean is wrong just because it does not always reflect anybody’s actual value. Maybe people are confused because in psychology, the implicit assumption is that the coefficient we estimate is indeed the effect for every single person; when they see evidence that this is not the case, their brain short-circuits. Maybe this has to do with statistical training focusing on factorial experiments, in which researchers can control the distribution of the factors. In the case of interactions, the average effect of one factor will be a feature of the distribution of the other factor, which is a feature of the design and can be arbitrarily chosen by the experimenter. In the causal inference literature, the default assumption is that effects vary between individuals. From that angle, effects that average over one thing or another are often the best we can get; individual-level effect estimates would require within-subject experimentation with additional assumptions.
3. who would never p-hack and who report everything transparently anyway
4. “Error-free measurements of all variables contained in the system? At this time of year? At this time of day? In this part of the country, localized entirely within your dataset?”
5. They should have ended after Reverend Bayes woke up to realize that he had not in fact mathematically proven the existence of God, but instead been in a coma, during which the love of his life eloped with a Frequentist.