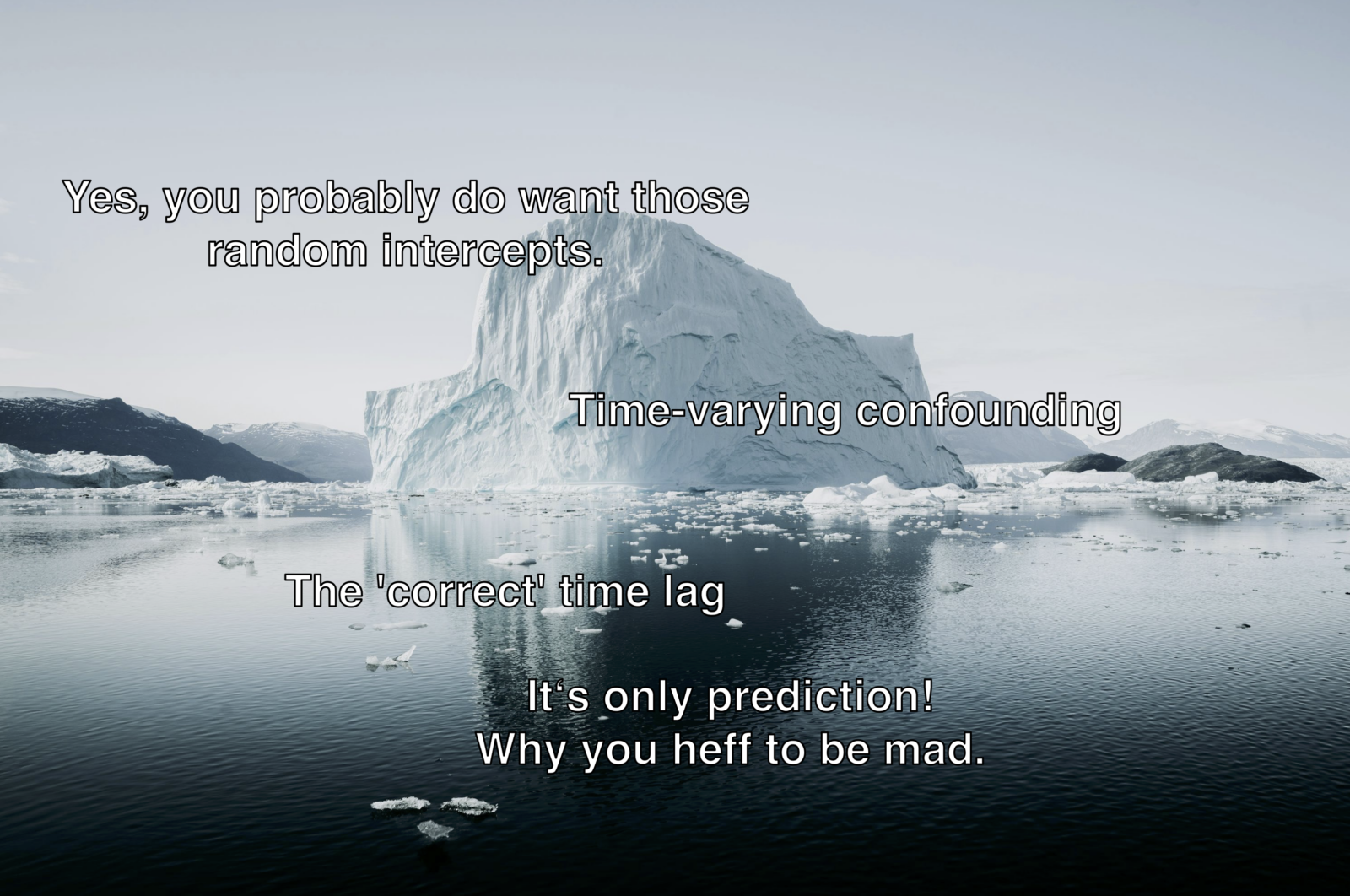

In some fields, researchers who end up with time series of two variables of interest (X and Y) like to…

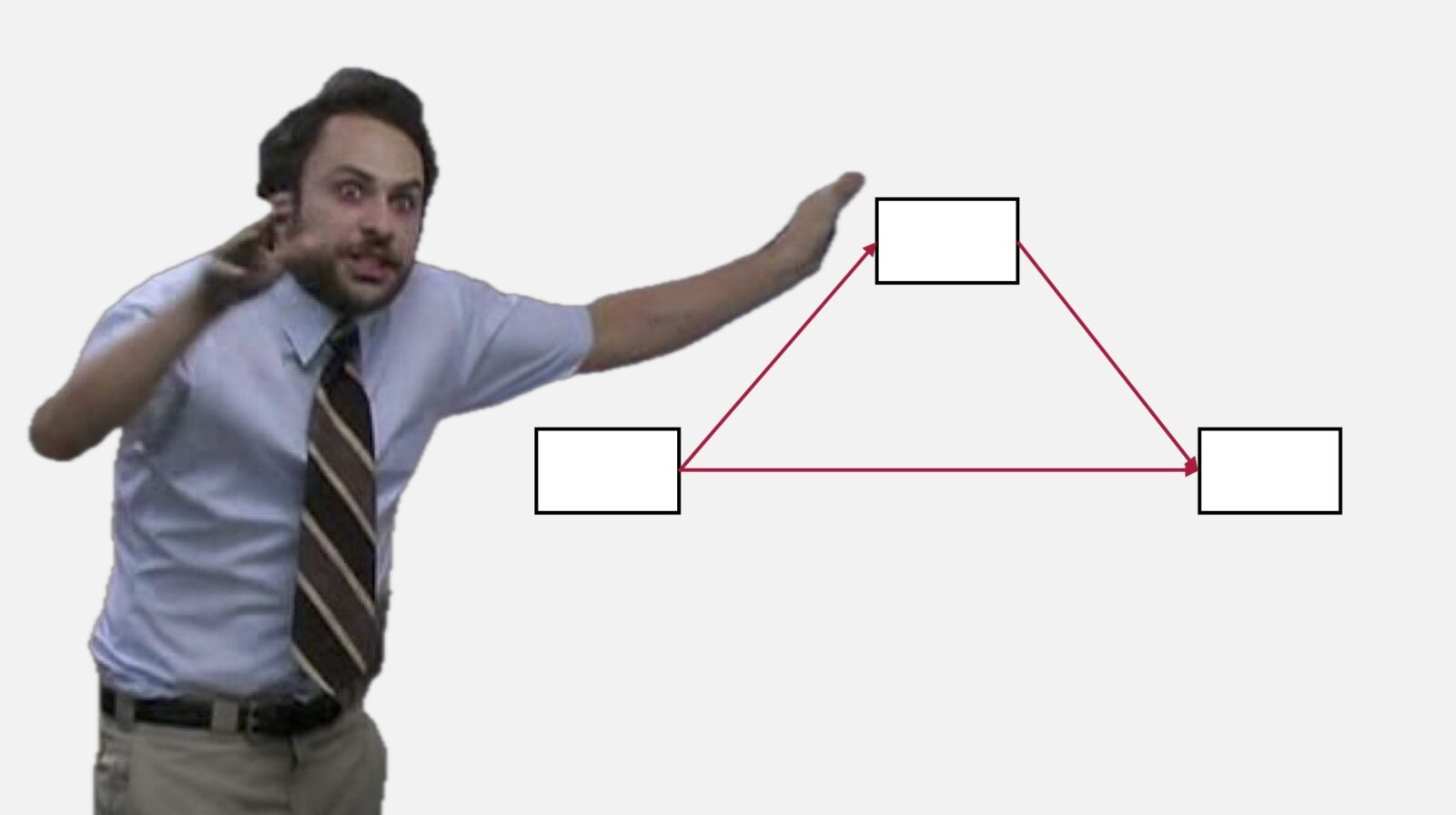

Mediation analysis has gotten a lot of flak, including classic titles such as “Yes, but what’s the mechanism? (Don’t expect…

For any central statistical analysis that you report in your manuscript, it should be absolutely clear to readers why the…

Reviewer notes are a new short format with brief explanations of basic ideas that might come in handy during (for…

A shibboleth is a custom, such as a choice of phrasing, that distinguishes one group of people from another. The…

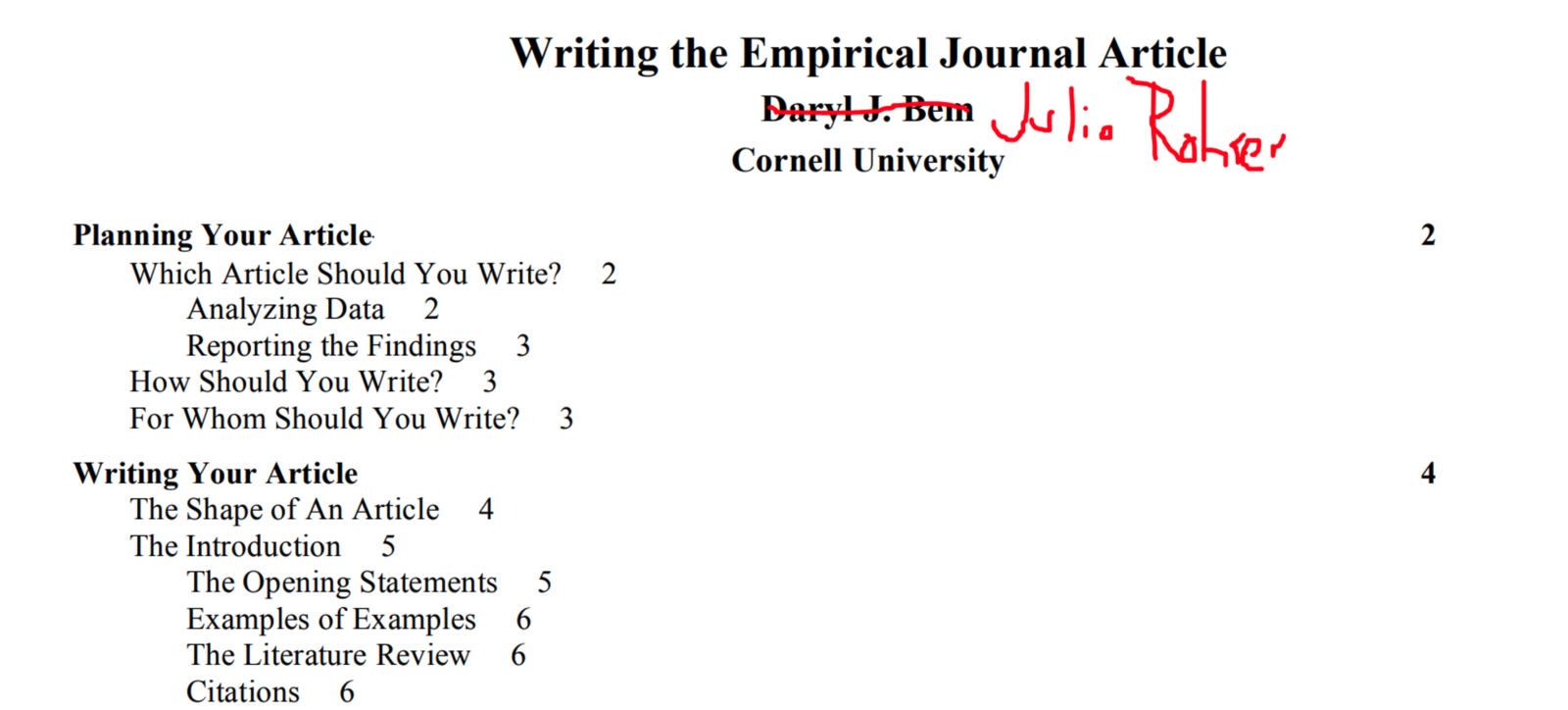

A wise man – I’m quite sure it was Brian Wansink – once pointed out that it is impossible to…

There have been persistent calls for psychologists to do more “idiographic” research, starting even before Peter Molenaar’s “Manifesto on Psychology…

Summer in Berlin – the perfect time and place to explore the city, take a walk in the Görli, go…

TL;DR: Tell your students about the potential outcomes framework. It will have (heterogeneous) causal effects on their understanding of causality…

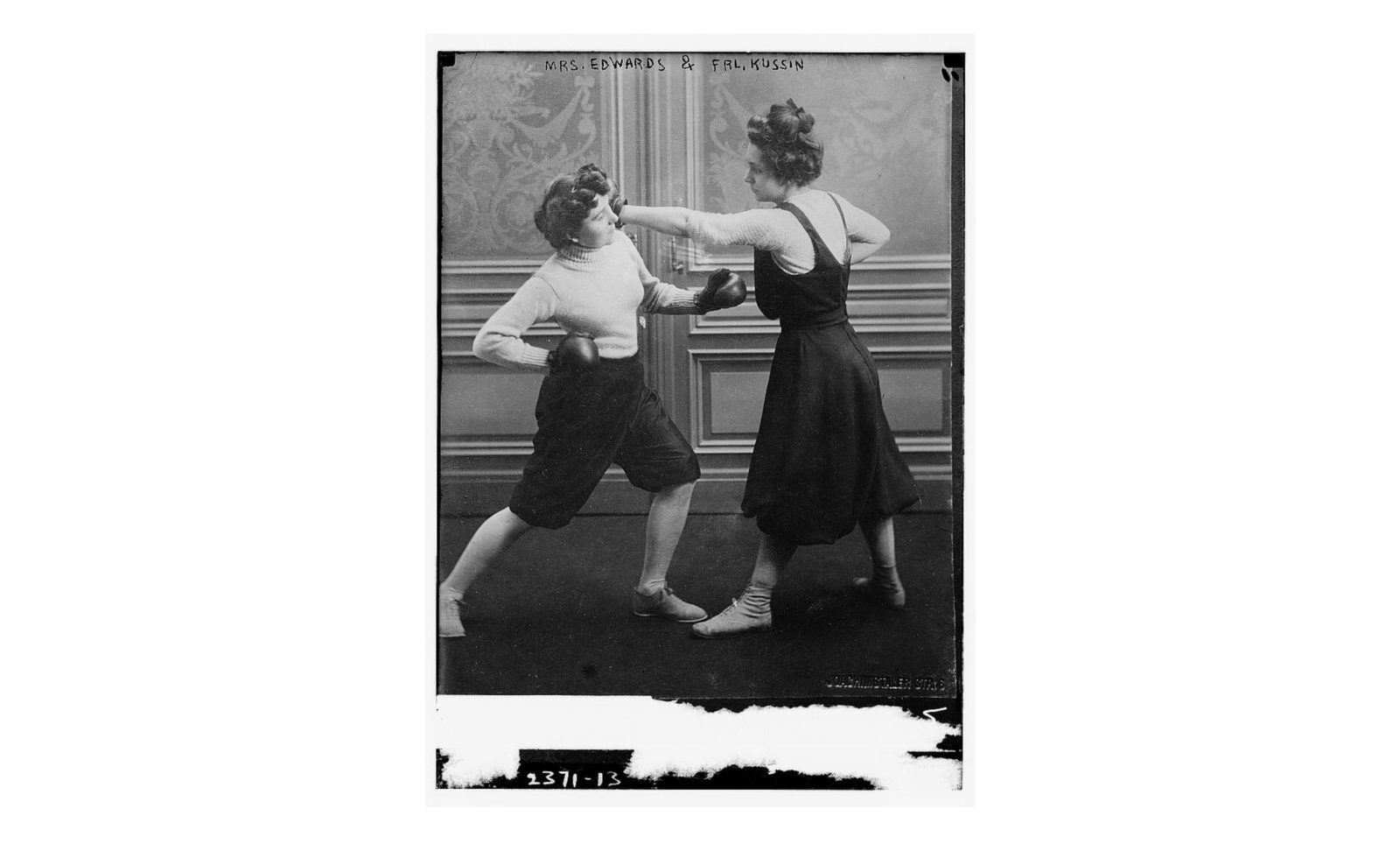

I don’t like getting into fights and sometimes I am concerned this keeps me from becoming a proper methods/stats person.…