If you read this blog, you probably know Malte Elson. If you know Malte, you probably know that he ~~has a worrying obsession with hats~~ once (a very, very long time ago) was a young and naïve man who thought that asking questions was a good idea when he and Patrick Markey came across an odd-looking paper, sending them on a mind-boggling odyssey (during which, among other things, their own universities had to decide whether asking questions was an ok thing to do). As far as we know, Patrick and Malte are back to being able to sleep at night, but other ripple effects are still unfolding. Today we are hosting a guest blog by Patrick, who tells us the most recent chapter of this story:

In 2014, Dr. Brad Bushman and his former graduate student, Jodi Whitaker, published ‘Boom, Headshot!’: Effect of Video Game Play and Controller Type on Firing Aim and Accuracy in the journal Communication Research. (The paper was published online first in April 2012, but didn’t appear in print until October 2014. Remember that the next time you’re complaining about publication lag!) The study purported to find that playing a violent video game with a realistic gun-shaped controller (similar to the one below) trained people to be better sharpshooters in real life.

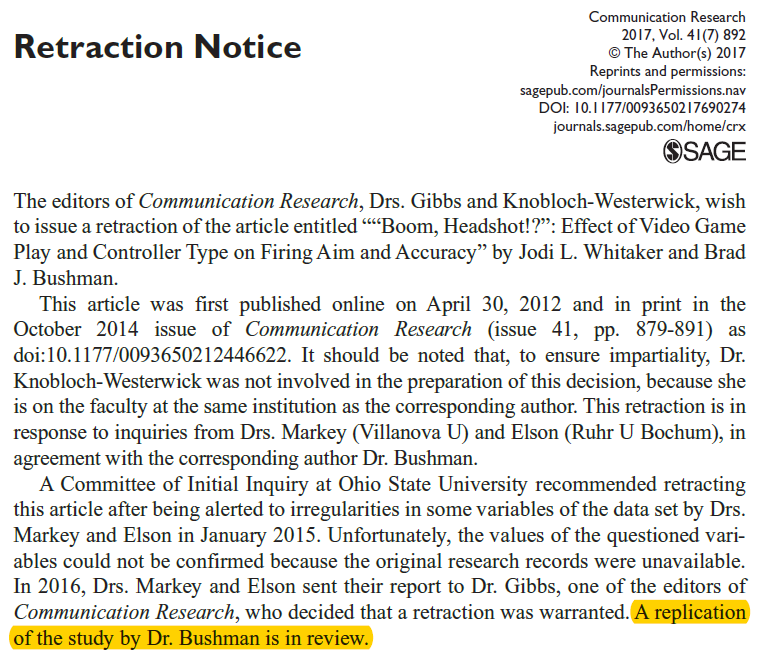

However, this controversial study was soon retracted after Dr. Malte Elson and I had discovered two different data files on Dr. Bushman’s computer between which the codes for variables appeared to have been switched. Additionally, the authors of the 2014 study were unable to provide the raw data in order to confirm which data file was correct. A formal investigation of the junior author was conducted (OSU decided not to formally investigate Dr. Bushman) and the original research article was eventually retracted (additional information and a full timeline of these events can be found here). In response to this retraction, Dr. Bushman recently published a replication of the retracted 2014 study, now titled, ‘Boom, Headshot!’: Violent First Person Shooter (FPS) Video Games that Reward Headshots Train Individuals to Aim for the Head When Shooting a Realistic Firearm in the journal Aggressive Behavior.

The Retracted 2014 Study

The 2014 study examined whether or not using a gun-shaped video game controller while playing video games “trained” players to shoot for the head of targets when using a realistic gun in real life. The only formal hypothesis in the 2014 study was:

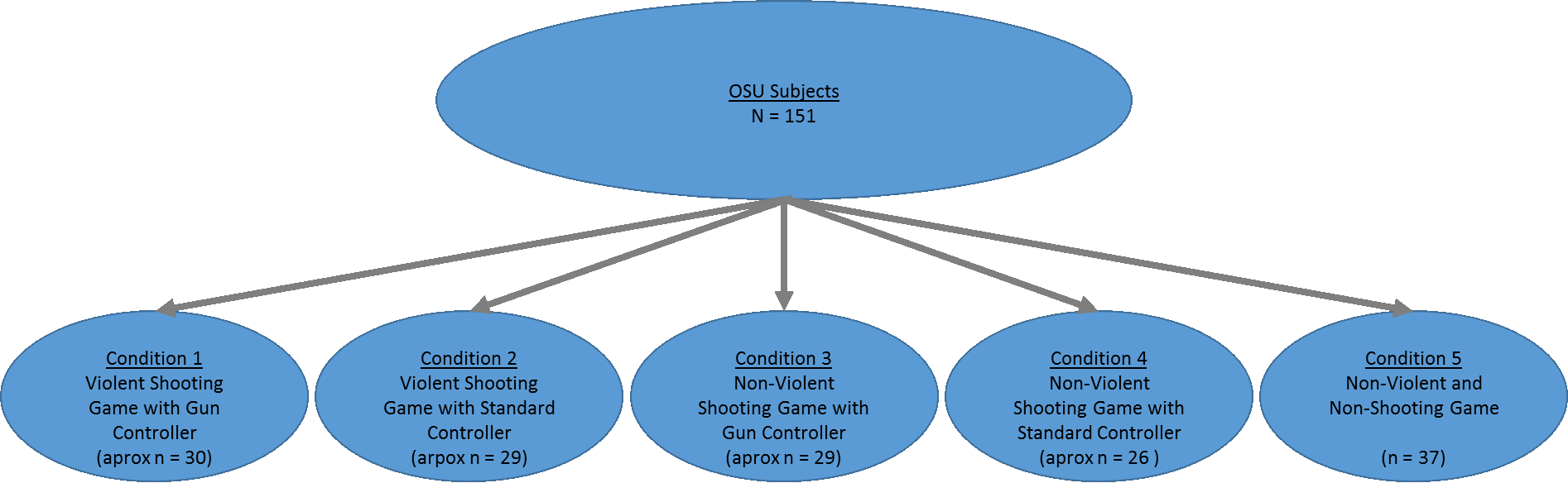

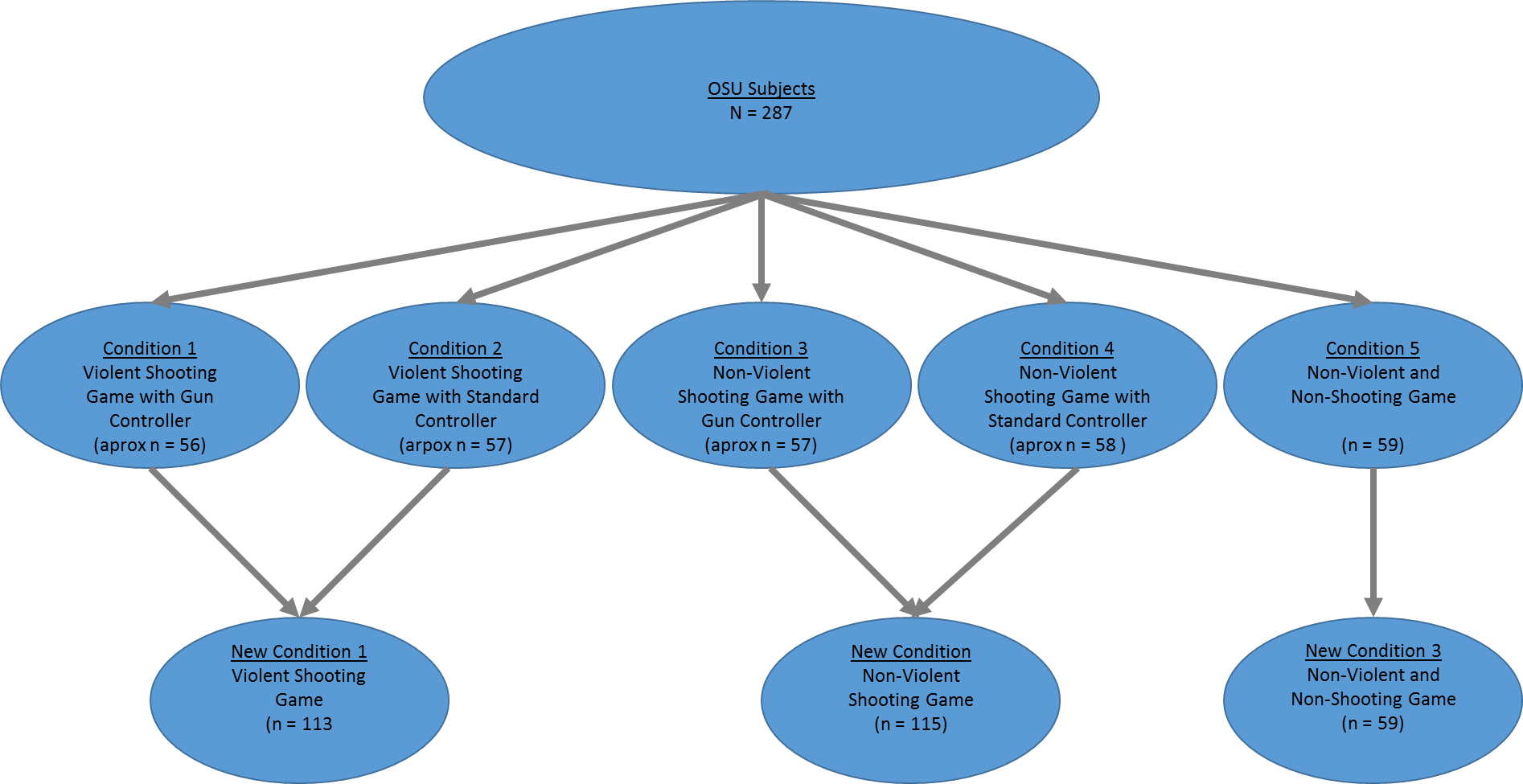

This hypothesis was examined using a fairly simple 5-group design in which participants were assigned to play one of the following:

- a violent shooting game with a gun controller;

- a violent shooting game with a standard controller;

- a nonviolent shooting game with a gun controller;

- a nonviolent shooting game with a standard controller; or

- a nonviolent non-shooting game.

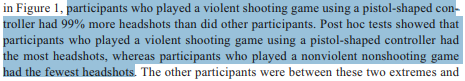

Results from the study found:

When the article was eventually retracted in December 2017 the retraction notice indicated:

Additionally, OSU released various statements at different times indicating that this study was being replicated and was under review in the journal of the retracted study.

It appears Communication Research eventually rejected the new 2018 “replication” study (as the 2018 study was eventually published in Aggressive Behavior). Sometime after being rejected from Communication Research, Dr. Bushman uploaded his study’s protocol to clinicaltrials.gov and indicated, again, that this new study was a replication of the previously retracted study.

Finally, after the 2018 study was published in Aggressive Behavior, an endnote in the article explicitly notes that this is a replication of the 2014 study.

The New 2018 “Replication” Study

In the new 2018 study the author substantially altered the only formal hypothesis from the 2014 study. The new hypothesis is:

This is a dramatic change because it removed the importance of the “gun-shaped controller,” which was the central element of the 2014 study’s hypothesis. This change in the hypothesis had the knock-on effect of the researcher merging various conditions that were separate in the 2014 design. Specifically, as in the 2014 study, participants in the 2018 study were randomly assigned to one of the five video game conditions. However, unlike the 2014 study, in the 2018 study Dr. Bushman merged “violent shooting game with gun controller” and “violent shooting game with standard controller” together and renamed the result “violent shooting game.” Additionally, he merged “nonviolent shooting game with gun controller” and “nonviolent shooting game with standard controller” together and renamed the result “nonviolent shooting game.”

Did the 2014 Findings Replicate in 2018?

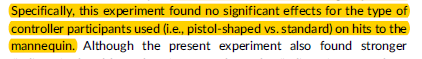

In an endnote the author provides a possible reason why these groups were merged together and a new hypothesis was added in the 2018 study: the 2018 study did not replicate the findings of the retracted 2014 study, whose only hypothesis predicted that the greatest number of headshots would occur for participants who played a violent game with a gun-shaped controller.

The author restates this later in the same endnote.

It is important to remember that this means the 2018 study failed to replicate the only formal hypothesis of the 2014 study. However, in the same endnote Dr. Bushman indicates:

This claim seems questionable, as the only formal hypothesis from the 2014 study focused on the importance of controller type, which the author himself states did not replicate. The central importance of controller type to the “main findings reported by Whitaker and Bushman” is further evident from the title of the 2014 article (the 2018 article removes mention of controller type from its title).

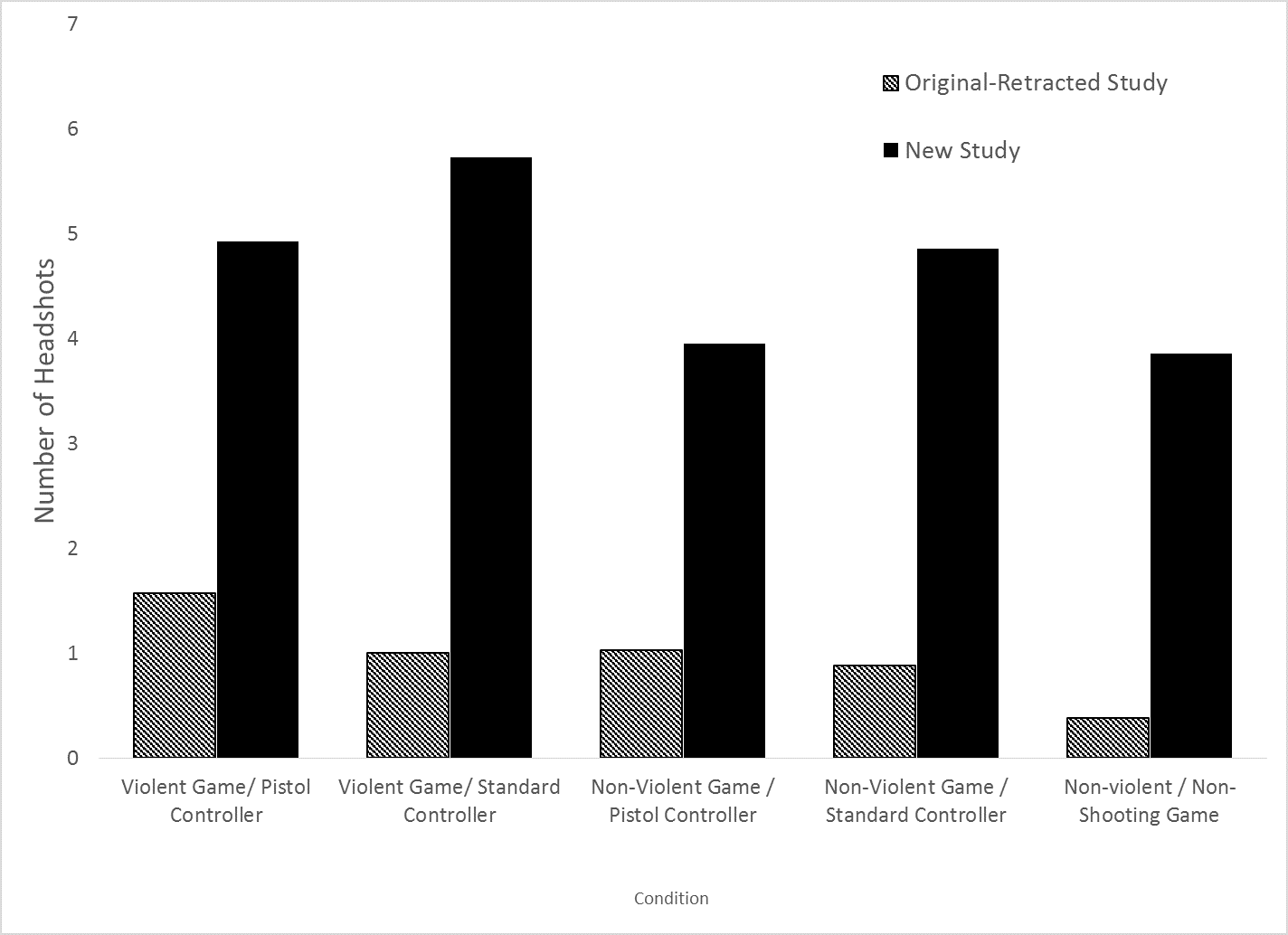

Although the 2018 study does not actually report the statistical findings for the original 5 groups (a violent shooting game with a gun controller; a violent shooting game with a standard controller; a nonviolent shooting game with a gun controller; a nonviolent shooting game with a standard controller; and a nonviolent non-shooting game), Dr. Bushman recently uploaded the data, which make it possible for readers to examine whether or not the new study replicated the findings of the previously retracted study. The figure below displays the mean number of headshots found in the two studies.

In the 2014 data set, contrast codes testing the study’s only hypothesis (Condition 1 [violent shooting game with gun controller] versus the other four conditions) were significant (t(146) = 3.66, p < .001). In the 2014 study the authors point out this dramatic difference:


However, in the 2018 data set these contrast codes are no longer significant (t(282) = 1.31, p = .558) and, contrary to the 2014 study, which found a significant 99% difference, the 2018 study found only a non-significant 6% difference.
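For readers who want to run this kind of test on the uploaded data themselves, here is a minimal sketch of a planned contrast comparing Condition 1 against the other four conditions. The weights (4, -1, -1, -1, -1) and the pooled-variance formula are the textbook choices and are an assumption on my part; neither article spells out the exact codes used. The function name `planned_contrast` and the toy numbers are purely illustrative and are not data from either study.

```python
from math import sqrt
from statistics import mean


def planned_contrast(groups, weights):
    """Return (t, df) for a planned contrast across independent groups.

    Uses the pooled within-group variance (the one-way ANOVA error term),
    so df = total N minus the number of groups.
    """
    if len(groups) != len(weights):
        raise ValueError("need exactly one weight per group")
    if abs(sum(weights)) > 1e-9:
        raise ValueError("contrast weights must sum to zero")

    n_total = sum(len(g) for g in groups)
    df = n_total - len(groups)

    # Pooled error variance: squared deviations from each group's own mean.
    sse = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    mse = sse / df

    # Weighted combination of group means and its standard error.
    estimate = sum(w * mean(g) for w, g in zip(weights, groups))
    se = sqrt(mse * sum(w ** 2 / len(g) for w, g in zip(weights, groups)))
    return estimate / se, df


# Made-up headshot counts for five conditions (NOT data from either study).
toy_groups = [
    [3, 4, 5, 4],  # condition 1: violent shooting game, gun controller
    [1, 2, 1, 2],  # condition 2
    [2, 1, 2, 1],  # condition 3
    [1, 1, 2, 2],  # condition 4
    [2, 2, 1, 1],  # condition 5
]
# Assumed weights: condition 1 versus the average of the other four.
t_value, df = planned_contrast(toy_groups, [4, -1, -1, -1, -1])
print(f"t({df}) = {t_value:.2f}")
```

Because the degrees of freedom are the total sample size minus the five groups, the reported t(146) and t(282) imply roughly 151 and 287 analyzed participants, respectively.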

Regardless of the claims by the author, there seems little doubt that the 2018 study failed to replicate the main findings of the 2014 study. One additional oddity is the total number of headshots found in the two studies. In the 2014 study headshots were very unusual (M = .95; SD = 1.05), but in the 2018 study they occurred fairly regularly (M = 4.86; SD = 3.82; d = 1.39). This is an increase of 411% despite the fact that both studies reported using identical methodologies and despite repeated claims by Dr. Bushman that the 2018 study is an “exact replication” of the 2014 study. Interestingly, in the 2014 study only one person was able to get 5 headshots, but in the 2018 study 43% of the participants managed to get 5 or more headshots. The meaning of this dramatic difference is unclear. In the 2014 study, data irregularities seemed to suggest that, sometime after data collection, condition codes were “switched” between subjects in the data file (e.g., subject 10’s condition code of 1 is switched with subject 143’s condition code of 2; see Markey & Elson, 2015 for more details). Exactly how such “switching” might have occurred is unclear. However, such an explanation does not seem consistent with the dramatic difference between headshots observed in the 2014 and 2018 studies. It is possible that data irregularities in the 2014 study were even more severe than previously expected.
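The size of that jump can be roughly checked from the summary statistics alone. The sketch below computes the percent increase and a Cohen's d from the rounded means and SDs quoted above. The choice of standardizer is an assumption: I use the root mean square of the two SDs (ignoring sample sizes) because it lands close to the reported value, but the formula actually used is not stated here. The helper names are illustrative.

```python
from math import sqrt


def percent_increase(old, new):
    """Relative change from old to new, expressed in percent."""
    return (new - old) / old * 100


def cohens_d_rms(m1, sd1, m2, sd2):
    """Cohen's d using the root mean square of the two SDs.

    Assumption: equal weighting of the two variances, regardless of n.
    """
    pooled_sd = sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return (m2 - m1) / pooled_sd


# Rounded summary statistics as quoted in the text.
pct = percent_increase(0.95, 4.86)
d = cohens_d_rms(0.95, 1.05, 4.86, 3.82)
print(f"{pct:.1f}% increase, d = {d:.2f}")
```

With the rounded inputs this gives about a 411.6% increase and d of about 1.40, in line with the 411% and d = 1.39 reported above; the small gaps are what one would expect from rounding the means and SDs before computing.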